System Design Mastery: Architecting Hyper-Scale Solutions for the Meta Interview

For aspiring Senior Engineering leaders targeting top-tier tech companies like Meta, Google, or Amazon, the System Design interview is the ultimate test. It’s not about recalling syntax or solving a single algorithm; it’s about demonstrating the architectural mindset required to build reliable, high-performing services that handle traffic at global, hyper-scale levels. This interview evaluates your ability to manage complexity, make crucial trade-offs, and master Distributed Systems principles.

Success in this crucial stage hinges on more than just technical knowledge. It requires a structured communication strategy, a clear focus on Scalability, and a deep understanding of why certain architectural choices are made over others. A typical design question, such as “Design Facebook’s News Feed” or “Design a globally distributed database,” demands that you think like a Chief Architect, balancing speed, cost, and complexity.

This comprehensive guide dives deep into the fundamentals and advanced tactics for mastering System Design interviews, focusing specifically on the level of detail and rigorous trade-off analysis expected from Senior Engineering candidates architecting solutions designed for extreme Scalability and resilience.

I. The Four Pillars of System Design and Distributed Systems

Every complex System Design problem is governed by four fundamental pillars that drive all subsequent architectural decisions, particularly in Distributed Systems:

- Availability: The probability that the system is operational at a given time. High availability is crucial for user-facing services (e.g., 99.999% uptime).

- Latency (Performance): The time delay between a user request and the system’s response. Low latency is essential for good user experience and is optimized through caching and CDNs.

- Scalability: The ability of the system to handle increased load (users, data, or transactions) efficiently by adding resources. This is arguably the most tested pillar for Senior Engineering roles.

- Durability and Fault Tolerance (Reliability): Fault tolerance is the ability of the system to keep operating despite component failures; durability is the guarantee that data, once acknowledged, is not lost. Both rely heavily on redundancy in Distributed Systems.

A successful System Design answer begins by clarifying which of these pillars are the primary constraints for the given problem (e.g., designing a banking system prioritizes durability and consistency, while designing a video streaming service prioritizes availability and low latency).
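Availability targets are easier to reason about as a downtime budget. The sketch below is a minimal illustration, assuming a 365-day year and independent component failures; the function names are hypothetical and not from any library. For example, 99.999% ("five nines") allows only about 5.26 minutes of downtime per year, chaining components in series lowers overall availability, and redundancy raises it.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # assumes a 365-day year


def downtime_minutes_per_year(availability: float) -> float:
    """Maximum yearly downtime permitted by an availability target such as 0.99999."""
    return (1 - availability) * MINUTES_PER_YEAR


def serial_availability(*components: float) -> float:
    """A request path that needs every component up: availabilities multiply."""
    result = 1.0
    for a in components:
        result *= a
    return result


def redundant_availability(a: float, replicas: int) -> float:
    """N redundant replicas, any one of which can serve; assumes independent failures."""
    return 1 - (1 - a) ** replicas


if __name__ == "__main__":
    for target in (0.999, 0.9999, 0.99999):
        print(f"{target * 100:g}% -> {downtime_minutes_per_year(target):.2f} min/year")
    print(f"LB + app + DB at 99.9% each: {serial_availability(0.999, 0.999, 0.999):.4%}")
    print(f"two independent 99% replicas: {redundant_availability(0.99, 2):.4%}")
```

The serial case shows why every extra hop on the critical path costs availability, and the redundant case shows why replication is the standard remedy.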

II. The Structured Approach: Interview Strategy for System Design

The most common failure in a System Design interview is a lack of structure. Senior Engineering candidates must guide the conversation through a deliberate sequence of steps:

- Understand Requirements & Scope: Never start drawing boxes immediately. Clarify functional requirements (what the system must do) and non-functional requirements (the system’s performance goals).

- Estimation and Constraints: Show that you can reason about Scalability quantitatively. Estimate key metrics: daily active users (DAU), read/write ratio, QPS (queries per second), and storage requirements (often in petabytes). These numbers set the scale: an estimate of 100,000 QPS, for example, tells the interviewer that the design must be distributed rather than run on a single server.

- High-Level Design (The Boxes and Arrows): Draw the basic components (Clients, Load Balancer, API Gateway, Services, Database/Data Store). Discuss the overall flow.

- Deep Dive (The Hard Parts): Focus on one or two major architectural decisions, such as the database choice (SQL vs. NoSQL), the caching strategy, or the sharding scheme. This is where Senior Engineering depth is demonstrated.

- Trade-offs and Iteration: Explicitly state trade-offs (e.g., choosing eventual consistency over strong consistency for better Scalability). Address potential failures (fault tolerance) and bottlenecks.
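The estimation step is plain arithmetic, and writing it out keeps every assumption visible. This is a minimal sketch: every input below (500 million DAU, 20 reads and 2 writes per user per day, 1 KB per write, a 3x peak-to-average factor) is a hypothetical assumption chosen for illustration, not a figure from any real system.

```python
from dataclasses import dataclass

SECONDS_PER_DAY = 86_400


@dataclass
class Estimate:
    read_qps: float
    write_qps: float
    peak_read_qps: float
    storage_tb_per_year: float


def estimate(dau: int, reads_per_user: float, writes_per_user: float,
             bytes_per_write: int, peak_factor: float = 3.0) -> Estimate:
    """Back-of-envelope sizing; each argument is an assumption to state out loud."""
    read_qps = dau * reads_per_user / SECONDS_PER_DAY
    write_qps = dau * writes_per_user / SECONDS_PER_DAY
    daily_bytes = dau * writes_per_user * bytes_per_write
    return Estimate(
        read_qps=read_qps,
        write_qps=write_qps,
        peak_read_qps=read_qps * peak_factor,  # traffic is not uniform over the day
        storage_tb_per_year=daily_bytes * 365 / 1e12,
    )


if __name__ == "__main__":
    # Hypothetical feed-like workload
    e = estimate(dau=500_000_000, reads_per_user=20, writes_per_user=2, bytes_per_write=1_000)
    print(f"read QPS ~{e.read_qps:,.0f} (peak ~{e.peak_read_qps:,.0f}), write QPS ~{e.write_qps:,.0f}")
    print(f"storage ~{e.storage_tb_per_year:,.0f} TB/year, read/write ratio {e.read_qps / e.write_qps:.0f}:1")
```

With these inputs, average read traffic comes to roughly 116,000 QPS (a 10:1 read/write ratio) and about 365 TB of new data per year, which is the scale at which sharding, replication, and caching become mandatory.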

III. Mastering Scalability: From Monolith to Hyper-Scale Distributed Systems

Scalability is the heart of the System Design interview. You must demonstrate proficiency across several strategies crucial for handling massive traffic in Distributed Systems.

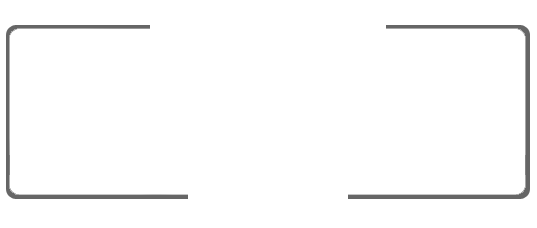

Horizontal Scaling and Sharding

As a Senior Engineering candidate, you must understand the difference between vertical scaling (a more powerful single machine) and horizontal scaling (many smaller machines). Vertical scaling is the simplest option but eventually hits a hardware ceiling; horizontal scaling is the path to hyper-scale. Scaling the data tier horizontally introduces the need for sharding: partitioning data across multiple databases (shards).

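- Sharding Key: The attribute (e.g., a user ID) that determines which shard stores each record. Discuss the difference between range-based sharding (efficient range queries, but sequential keys risk hotspots) and hash-based sharding (better distribution, but range queries must touch every shard).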

- Replication and Redundancy: To ensure high availability and durability in Distributed Systems, data must be replicated. Distinguish between Leader-Follower (Master-Slave) and Peer-to-Peer (leaderless) replication models, and discuss how they affect write Scalability and read performance: adding followers scales reads, but every write still funnels through a single leader.

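- Consistent Hashing: A crucial technique for horizontal Scalability. With naive hash-mod-N placement, changing the number of shards remaps almost every key; consistent hashing places nodes and keys on a hash ring so that adding or removing a node moves only roughly 1/N of the keys, improving the resilience of the Distributed Systems architecture.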
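The consistent hashing idea above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any particular database's implementation: MD5 is used only to spread keys uniformly, and the class name, node names, and the choice of 100 virtual nodes per server are hypothetical.

```python
import bisect
import hashlib


def ring_hash(key: str) -> int:
    """Map a string to a point on the ring; MD5 is used for spread, not security."""
    return int(hashlib.md5(key.encode("utf-8")).hexdigest(), 16)


class ConsistentHashRing:
    def __init__(self, nodes=(), vnodes: int = 100):
        self.vnodes = vnodes  # virtual nodes per physical node smooth out the load
        self._ring: list[tuple[int, str]] = []  # sorted (position, node) pairs
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        for i in range(self.vnodes):
            bisect.insort(self._ring, (ring_hash(f"{node}#{i}"), node))

    def remove_node(self, node: str) -> None:
        self._ring = [(pos, n) for pos, n in self._ring if n != node]

    def get_node(self, key: str) -> str:
        if not self._ring:
            raise LookupError("no nodes in the ring")
        # Walk clockwise to the first virtual node at or after the key's position,
        # wrapping around to the start of the ring if needed.
        idx = bisect.bisect_left(self._ring, (ring_hash(key), ""))
        return self._ring[idx % len(self._ring)][1]


if __name__ == "__main__":
    keys = [f"user:{i}" for i in range(10_000)]
    ring = ConsistentHashRing(["db-a", "db-b", "db-c", "db-d"])
    before = {k: ring.get_node(k) for k in keys}
    ring.add_node("db-e")
    ring_moved = sum(before[k] != ring.get_node(k) for k in keys) / len(keys)
    # Naive placement for comparison: shard = hash(key) % number_of_shards
    mod_moved = sum(ring_hash(k) % 4 != ring_hash(k) % 5 for k in keys) / len(keys)
    print(f"consistent hashing moved {ring_moved:.1%} of keys; hash mod N moved {mod_moved:.1%}")
```

Because a new node only takes over arcs of the ring, every key that moves goes to the new node, while going from 4 to 5 shards under hash-mod-N remaps most keys. Virtual nodes trade a larger ring for a more even spread of keys per server.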

- CDN (Content Delivery Network): For static content, reducing load on origin servers and geographical latency.

- Application/Server-Side Caching (e.g., Redis/Memcached): For frequently accessed dynamic data. Discuss the trade-off of cache eviction policies (LRU, LFU) versus cache invalidation complexity.

- Read/Write Patterns: Use cases for Cache-Aside, Write-Through, and Read-Through patterns, demonstrating expertise beyond simple component inclusion.

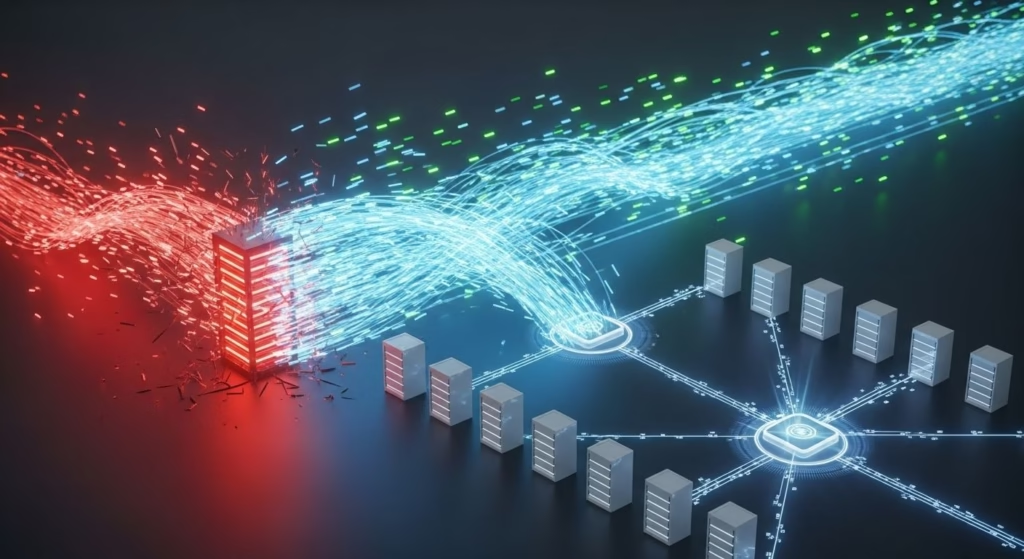

- Strong Consistency (CP systems): All replicas must agree on data before a write is confirmed (e.g., RDBMS, ZooKeeper). Used when data integrity is paramount (e.g., financial transactions). Limits Scalability.

- Eventual Consistency (AP systems): Writes are accepted quickly and replicated asynchronously (e.g., Cassandra, DynamoDB). Favored for high Scalability and availability (e.g., social media feeds, recommendations) where temporary inconsistencies are acceptable.

- Load Leveling: Queues absorb unexpected traffic spikes, preventing backend services from crashing (crucial for maintaining Availability).

- Service Decoupling: Isolating services ensures that the failure of one component (e.g., the notification service) does not cascade and take down the core API service. This is fundamental for robust Distributed Systems.

- Relational Databases (PostgreSQL/MySQL): Best for transactional data requiring strong consistency and complex joins. Used sparingly for high-read scenarios due to Scalability limits.

- Key-Value Stores (DynamoDB/Cassandra): Ideal for massive Scalability and high-speed reads/writes with simple key lookups (e.g., storing user sessions, metadata). Excellent AP performance for Distributed Systems.

- Graph Databases (Neo4j): Essential for modeling relationships (e.g., friend networks on social media). Discuss the trade-off: great for complex queries, but challenging for global Scalability.

- Search Indexes (Elasticsearch): Used for rapid full-text search capabilities, separating the search burden from the primary database.

- Handling Fanout: The massive write amplification common in systems like Twitter or Instagram (where one write leads to millions of read queries). Solutions involve shifting work from read-heavy (pull model) to write-heavy (push model, fanout-on-write) for better read Scalability.

- Data Centers and Regionalization: Discuss multi-region deployment for disaster recovery and geographic load balancing, reducing latency for global users. This necessitates solving cross-region data synchronization, a hallmark of advanced Distributed Systems design.

- Monitoring and Observability: At hyper-scale, failure is inevitable. Detail how you would implement logging, metrics, and tracing (the “three pillars of observability”) to quickly identify and mitigate bottlenecks and failures in the complex web of Distributed Systems.

- Microservices vs. Monolith: Explain the pros and cons of breaking down the system into microservices. While microservices boost engineering velocity and Scalability, they drastically increase operational complexity—a critical trade-off a Senior Engineering leader must understand.

Caching Strategies for Latency and Scalability

Caching is the single most effective way to improve read latency and system Scalability. Candidates should discuss multi-layered caching schemes:

IV. Advanced Distributed Systems Concepts for Senior Engineering

The jump to Senior Engineering means moving past basic components and into complex coordination and resilience mechanisms within Distributed Systems.

The CAP Theorem and Consistency Models

In the context of Distributed Systems, the CAP theorem states a system can only satisfy two out of three guarantees: Consistency (C), Availability (A), and Partition Tolerance (P). Since large-scale systems operating over a network must ensure Partition Tolerance, the core trade-off becomes C vs. A.

A high-quality System Design answer will explicitly state this choice and defend the consistency model based on the use case.

Asynchronous Processing and Decoupling

Many operations in hyper-scale applications (video processing, email notifications, batch jobs) don’t need immediate results. Using message queues (Kafka, RabbitMQ) is vital for improving Scalability and resiliency:

V. Design Deep Dive: Selecting Data Stores for Scale

The choice of database is never “one size fits all.” Senior Engineering candidates must propose a polyglot persistence strategy, tailoring the data store to the specific data type and access pattern required for hyper-scale System Design.

When presenting your design, explicitly map each data store component to its function (e.g., “We will use Cassandra for the user timeline data due to its high write throughput, and Postgres for financial transaction logs due to the need for strong consistency”).

VI. Hyper-Scale Architecting: Beyond the Basics

To truly achieve mastery, a Senior Engineering candidate must address the complexities inherent to global, massive Distributed Systems:
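The fanout trade-off above can be illustrated with an in-memory sketch of a hybrid feed: posts from ordinary accounts are pushed into each follower's precomputed feed (fanout-on-write), while posts from accounts above a follower threshold are pulled at read time (fanout-on-read). Everything here is hypothetical, including the `FeedService` class, the threshold, and the feed size; a real system would back this with a durable store and a queue rather than Python dictionaries.

```python
from collections import defaultdict, deque


class FeedService:
    """In-memory sketch of a hybrid push/pull news feed (illustrative only)."""

    def __init__(self, celebrity_threshold: int = 10_000, feed_size: int = 100):
        self.celebrity_threshold = celebrity_threshold  # hypothetical cutoff
        self.followers = defaultdict(set)   # author -> users who follow them
        self.following = defaultdict(set)   # user -> authors they follow
        self.posts = defaultdict(list)      # author -> [(timestamp, post_id)]
        self.feeds = defaultdict(lambda: deque(maxlen=feed_size))  # precomputed feeds
        self.clock = 0  # logical timestamp standing in for wall-clock time

    def follow(self, user: str, author: str) -> None:
        self.followers[author].add(user)
        self.following[user].add(author)

    def is_celebrity(self, author: str) -> bool:
        return len(self.followers[author]) >= self.celebrity_threshold

    def post(self, author: str, post_id: str) -> None:
        self.clock += 1
        entry = (self.clock, post_id)
        self.posts[author].append(entry)
        if not self.is_celebrity(author):
            # Fanout-on-write: one post becomes one feed write per follower.
            for user in self.followers[author]:
                self.feeds[user].append(entry)

    def read_feed(self, user: str, limit: int = 10) -> list[str]:
        merged = set(self.feeds[user])  # pushed entries are already in place
        # Fanout-on-read: pull recent posts from high-follower accounts at read time.
        for author in self.following[user]:
            if self.is_celebrity(author):
                merged.update(self.posts[author][-limit:])
        newest_first = sorted(merged, reverse=True)
        return [post_id for _, post_id in newest_first[:limit]]


if __name__ == "__main__":
    svc = FeedService(celebrity_threshold=3)
    for fan in ("u1", "u2", "u3"):
        svc.follow(fan, "star")
    svc.follow("u1", "friend")
    svc.post("friend", "p1")  # pushed into u1's feed
    svc.post("star", "p2")    # not pushed; merged in when u1 reads
    print(svc.read_feed("u1"))
```

Here `read_feed("u1")` returns `["p2", "p1"]`: the ordinary post was written ahead of time, while the high-follower post was merged at read time, so write amplification stays bounded for accounts with very large audiences.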

Conclusion: Earning the Senior Engineering Title

The Meta System Design interview is designed to test your maturity as an architect. It’s not about finding the single “right” answer, but about demonstrating a reasoned, step-by-step methodology that balances the competing constraints of Scalability, availability, and complexity inherent in large Distributed Systems. By rigorously structuring your answers using requirements gathering, quantitative estimation, deliberate architectural choices, and explicit trade-off discussions, you prove that you possess the mastery required of a Senior Engineering leader capable of architecting hyper-scale solutions.